Every Studio

Lessons from engineers shipping AI products.

From Our Columnists

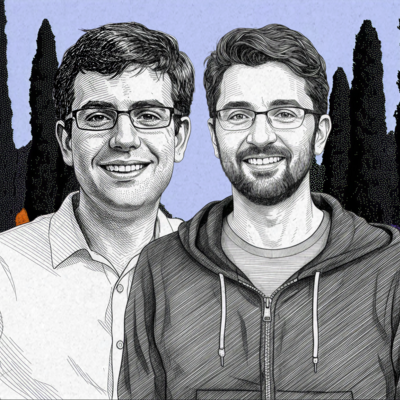

Dan Shipper

Dan Shipper is the CEO and cofounder of Every. Every week he explores the frontiers of AI in his column, Chain of Thought, and on his podcast, ‘AI & I.’

Read more from Dan

Podcast

AI & I

Every week, Dan sits down with the smartest people in tech and explores the possibilities of AI together.

Latest episodes
